Q-Learning: Interactive Reinforcement Learning Foundation

Published: July 6, 2025

|

Last Updated: July 7, 2025

What is Q-Learning?

Q-Learning is a model-free reinforcement learning algorithm that teaches agents to make optimal decisions in unknown environments. Unlike supervised learning, the agent learns through trial and error, discovering which actions lead to the best long-term rewards without needing a teacher or pre-labeled data.

The Q-Learning Formula

Q-Learning Update Formula:

Q(s,a) ← Q(s,a) + α[r + γ max Q(s',a') - Q(s,a)]

- s: Current position

- a: Action taken (↑→↓←)

- r: Immediate reward (-1 step, +30 goal, -30 wall)

- s': New position after action

- α: Learning rate (0.1)

- γ: Discount factor (0.9)

Example Calculation:

Agent is at position (2,3) with current Q-value of 5.2 for moving right. Agent moves right, gets reward -1 (the standard step penalty - each move costs -1 to encourage finding shorter paths), and lands at (3,3).

Before (2,3)

After (3,3)

Step 1: Current Q-value for right action = 5.2

Step 2: Reward received = -1 (step penalty - encourages shorter paths)

Step 3: Best Q-value at new position (3,3) = 12.5 (up direction)

Step 4: Apply formula:

New Q = 5.2 + 0.1 × [-1 + 0.9 × 12.5 - 5.2]

New Q = 5.2 + 0.1 × [-1 + 11.25 - 5.2]

New Q = 5.2 + 0.1 × [5.05]

New Q = 5.2 + 0.505 = 5.7

The Q-value for moving right from (2,3) improved from 5.2 to 5.7 because the agent discovered a position with excellent future prospects!

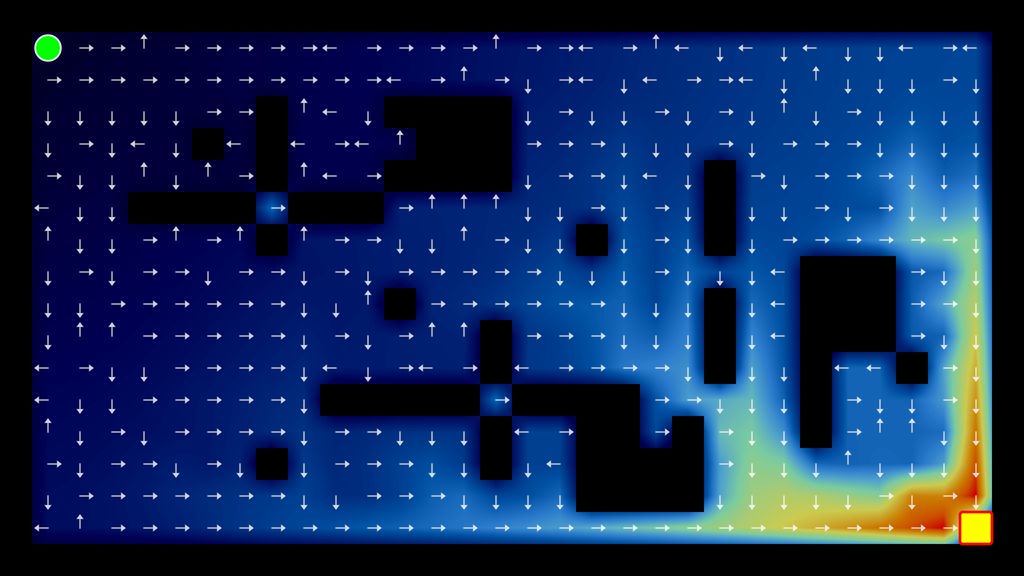

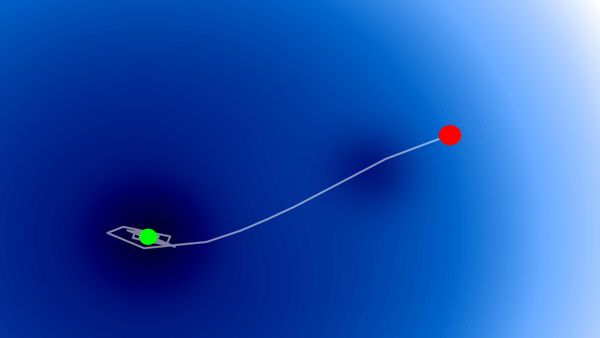

Interactive Q-Learning Demonstration

STEP Mode: Watch each individual action and Q-value update. AUTO Mode: Rapid learning with continuous episodes.

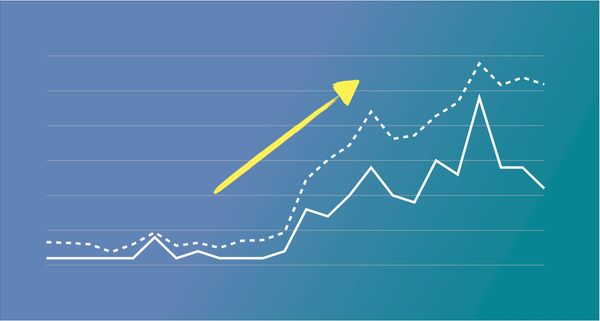

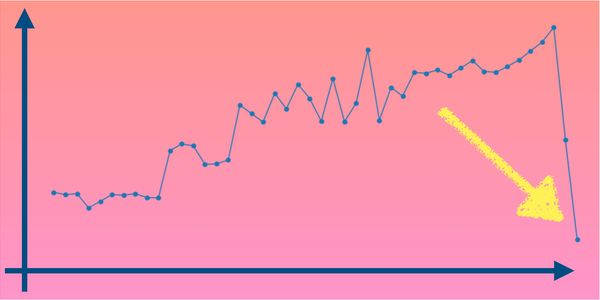

Learning Progress (Total Reward per Episode)

Complete Implementation

Here's a full Q-Learning implementation with training loop:

import random

import numpy as np

class QLearningAgent:

def __init__(self, grid_size=10, alpha=0.1, gamma=0.9, epsilon=0.1):

self.grid_size = grid_size

self.alpha = alpha # Learning rate

self.gamma = gamma # Discount factor

self.epsilon = epsilon # Exploration rate

self.q_table = {} # Q-values for each (state, action) pair

self.actions = ['up', 'right', 'down', 'left']

# Initialize Q-table

for x in range(grid_size):

for y in range(grid_size):

for action in self.actions:

self.q_table[(x, y, action)] = 0.0

def choose_action(self, x, y):

"""Choose action using epsilon-greedy policy"""

if random.random() < self.epsilon:

return random.choice(self.actions) # Explore

# Exploit: choose action with highest Q-value

q_values = [self.q_table.get((x, y, action), 0) for action in self.actions]

max_q = max(q_values)

best_actions = [action for action, q in zip(self.actions, q_values) if q == max_q]

return random.choice(best_actions)

def update_q_value(self, state, action, reward, next_state):

"""Update Q-value using Q-learning formula"""

x, y = state

next_x, next_y = next_state

current_q = self.q_table.get((x, y, action), 0)

next_q_values = [self.q_table.get((next_x, next_y, a), 0) for a in self.actions]

max_next_q = max(next_q_values) if next_q_values else 0

# Q(s,a) = Q(s,a) + α[r + γ max Q(s',a') - Q(s,a)]

new_q = current_q + self.alpha * (reward + self.gamma * max_next_q - current_q)

self.q_table[(x, y, action)] = new_q

def train(self, walls, goal, episodes=1000):

"""Train the agent for specified number of episodes"""

for episode in range(episodes):

x, y = 0, 0 # Start position

for step in range(200): # Max steps per episode

action = self.choose_action(x, y)

# Calculate new position and reward

moves = {'up': (0, -1), 'right': (1, 0), 'down': (0, 1), 'left': (-1, 0)}

dx, dy = moves[action]

new_x, new_y = x + dx, y + dy

if (new_x < 0 or new_x >= self.grid_size or

new_y < 0 or new_y >= self.grid_size):

reward = -30 # Fell off grid

new_x, new_y = x, y

elif (new_x, new_y) in walls:

reward = -30 # Hit wall

new_x, new_y = x, y

elif (new_x, new_y) == goal:

reward = 30 # Reached goal

else:

reward = -1 # Normal step

# Update Q-value and move

self.update_q_value((x, y), action, reward, (new_x, new_y))

x, y = new_x, new_y

if (x, y) == goal or reward == -30:

break

# Usage

walls = [(1,1), (2,1), (3,1)]

goal = (9, 8)

agent = QLearningAgent()

agent.train(walls, goal, episodes=500)Pros and Cons of Q-Learning

Advantages

- Model-free: No need to know environment dynamics

- Guaranteed convergence under right conditions

- Simple and intuitive to understand

- Can learn optimal policies through exploration

Limitations

- Only works with discrete states and actions

- Slow convergence in large state spaces

- Memory grows with state-action combinations

- Requires extensive exploration to learn well

Key Takeaways

- Q-values predict future rewards - they help the agent choose the best action

- Learning happens through experience - each action updates the agent's knowledge

- Values propagate backward - areas near rewards become valuable first

- Exploration vs exploitation - the agent must try new things while using what it knows

Leave comment

Comments

Check out other blog posts

2025/07/06

Optimization Algorithms: SGD, Momentum, and Adam

2025/07/05

Building a Japanese BPE Tokenizer: From Characters to Subwords

2024/06/19

Create A Simple and Dynamic Tooltip With Svelte and JavaScript

2024/06/17

Create an Interactive Map of Tokyo with JavaScript

2024/06/14

How to Easily Fix Japanese Character Issue in Matplotlib

2024/06/13

Book Review | Talking to Strangers: What We Should Know about the People We Don't Know by Malcolm Gladwell

2024/06/07

Most Commonly Used 3,000 Kanjis in Japanese

2024/06/07

Replace With Regex Using VSCode

2024/06/06

Do Not Use Readable Store in Svelte

2024/06/05

Increase Website Load Speed by Compressing Data with Gzip and Pako

2024/05/31

Find the Word the Mouse is Pointing to on a Webpage with JavaScript

2024/05/29

Create an Interactive Map with Svelte using SVG

2024/05/28

Book Review | Originals: How Non-Conformists Move the World by Adam Grant & Sheryl Sandberg

2024/05/27

How to Algorithmically Solve Sudoku Using Javascript

2024/05/26

How I Increased Traffic to my Website by 10x in a Month

2024/05/24

Life is Like Cycling

2024/05/19

Generate a Complete Sudoku Grid with Backtracking Algorithm in JavaScript

2024/05/16

Why Tailwind is Amazing and How It Makes Web Dev a Breeze

2024/05/15

Generate Sitemap Automatically with Git Hooks Using Python

2024/05/14

Book Review | Range: Why Generalists Triumph in a Specialized World by David Epstein

2024/05/13

What is Svelte and SvelteKit?

2024/05/12

Internationalization with SvelteKit (Multiple Language Support)

2024/05/11

Reduce Svelte Deploy Time With Caching

2024/05/10

Lazy Load Content With Svelte and Intersection Oberver

2024/05/10

Find the Optimal Stock Portfolio with a Genetic Algorithm

2024/05/09

Convert ShapeFile To SVG With Python

2024/05/08

Reactivity In Svelte: Variables, Binding, and Key Function

2024/05/07

Book Review | The Art Of War by Sun Tzu

2024/05/06

Specialists Are Dead. Long Live Generalists!

2024/05/03

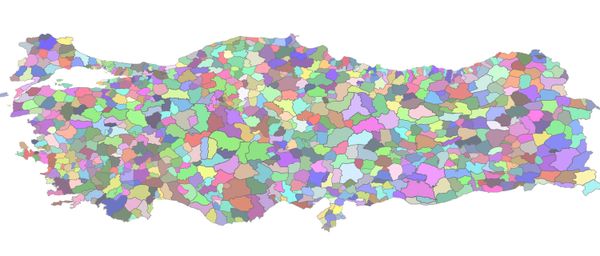

Analyze Voter Behavior in Turkish Elections with Python

2024/05/01

Create Turkish Voter Profile Database With Web Scraping

2024/04/30

Make Infinite Scroll With Svelte and Tailwind

2024/04/29

How I Reached Japanese Proficiency In Under A Year

2024/04/25

Use-ready Website Template With Svelte and Tailwind

2024/01/29

Lazy Engineers Make Lousy Products

2024/01/28

On Greatness

2024/01/28

Converting PDF to PNG on a MacBook

2023/12/31

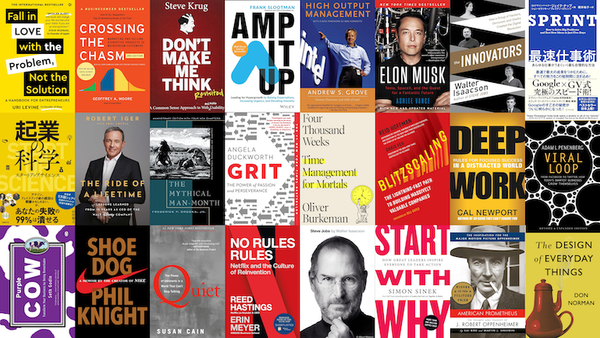

Recapping 2023: Compilation of 24 books read

2023/12/30

Create a Photo Collage with Python PIL

2024/01/09

Detect Device & Browser of Visitors to Your Website

2024/01/19

Anatomy of a ChatGPT Response