Building a Japanese BPE Tokenizer: From Characters to Subwords

Published: July 5, 2025

What You'll Learn

- What Byte Pair Encoding (BPE) is and why it's crucial for modern NLP

- How BPE reduces vocabulary size while preserving meaning

- Building a complete BPE tokenizer for Japanese text

- Interactive examples showing the algorithm in action

What is Byte Pair Encoding?

Byte Pair Encoding (BPE) is a subword tokenization algorithm used by modern language models such as the GPT family; BERT uses WordPiece, a closely related subword method. Originally designed for data compression, BPE is now a standard tool in NLP.

The algorithm is simple: start with individual characters and iteratively merge the most frequent adjacent pairs until reaching your target vocabulary size. This balances character-level granularity with word-level semantics.
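As a minimal sketch of that loop (an illustration only, not the implementation used later in this post), the code below runs two merges on a made-up toy string. `toy_bpe` is a hypothetical helper; it counts adjacent pairs with `collections.Counter`, and on ties the pair seen first wins.

```python
from collections import Counter


def toy_bpe(text, num_merges):
    """Hypothetical helper: learn up to `num_merges` BPE merges on `text`."""
    tokens = list(text)  # start from single characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter(zip(tokens, tokens[1:]))  # adjacent pair frequencies
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # on ties, the pair seen first wins
        merges.append(best)
        merged, i = [], 0
        while i < len(tokens):
            # Merge non-overlapping occurrences of `best`, scanning left to right
            if i < len(tokens) - 1 and (tokens[i], tokens[i + 1]) == best:
                merged.append(tokens[i] + tokens[i + 1])
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return merges, tokens


merges, tokens = toy_bpe("東京都と東京駅と東京", num_merges=2)
print(merges)  # [('東', '京'), ('と', '東京')]
print(tokens)  # ['東京', '都', 'と東京', '駅', 'と東京']
```

The first merge picks 東 + 京 (3 occurrences); the second picks と + 東京 (2 occurrences), which crosses a word boundary. BPE merges whatever is frequent, not what is linguistically a word.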

Why Do We Need BPE?

Traditional tokenization approaches have significant limitations:

Word-Level Issues

- Huge vocabulary sizes (100K+ words)

- Can't handle out-of-vocabulary words

- Struggles with morphologically rich languages

- Poor handling of rare words

BPE Benefits

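- Manageable vocabulary (5K-50K tokens)

- No out-of-vocabulary words (fully true for byte-level BPE; a character-level variant can still meet characters it never saw in training)

- Captures subword patterns

- Language-agnostic approach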
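The "no out-of-vocabulary words" benefit holds fully for byte-level BPE, whose base vocabulary is the 256 possible byte values. A character-level variant, like the one built later in this post, starts from the characters seen in training, so a new kanji can fall outside it. A small standard-library check; the sample strings are arbitrary choices for illustration:

```python
# Toy character-level base vocabulary built from one short sample (assumption)
char_vocab = set("最近TVでも人気者のお笑い")

new_text = "爆笑"
# Characters that a character-level vocabulary cannot represent at all
unseen_chars = [ch for ch in new_text if ch not in char_vocab]
print(unseen_chars)  # ['爆']

# Byte-level view: UTF-8 turns any string into values in 0..255,
# so a 256-symbol base vocabulary covers every possible input.
byte_values = list(new_text.encode("utf-8"))
print(byte_values)  # [231, 136, 134, 231, 172, 145]
print(all(0 <= b < 256 for b in byte_values))  # True
```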

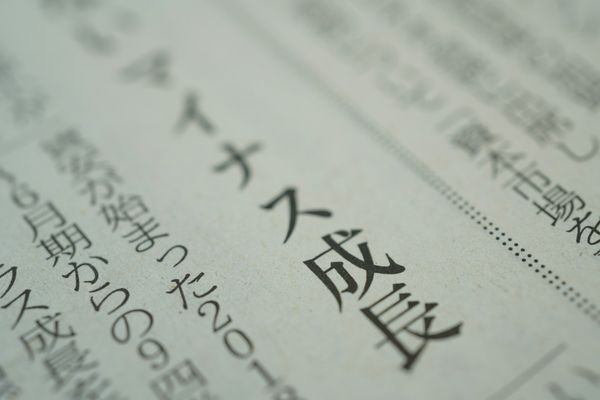

For Japanese specifically, BPE is particularly valuable because it can learn common kanji combinations, particles, and grammatical patterns automatically, without requiring explicit linguistic knowledge.

Interactive BPE Demo

Let's see how BPE works step by step with Japanese text:

Original Text: 最近TVでも人気者のお笑い

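Stepping through the five merges listed in the merge table further down shows the sentence being built up token by token. This sketch assumes the merges are applied in table order; `apply_merge` is a hypothetical helper that mirrors the `merge_tokens` function defined later.

```python
def apply_merge(tokens, pair):
    """Hypothetical helper: merge non-overlapping occurrences of `pair`, left to right."""
    merged, i = [], 0
    while i < len(tokens):
        if i < len(tokens) - 1 and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


# The five merges from the merge table, in table order
merges = [("最", "近"), ("T", "V"), ("で", "も"), ("人", "気"), ("者", "の")]

tokens = list("最近TVでも人気者のお笑い")  # step 0: one token per character
print("Step 0:", tokens)
for step, pair in enumerate(merges, start=1):
    tokens = apply_merge(tokens, pair)
    print(f"Step {step}:", tokens)
# Step 5: ['最近', 'TV', 'でも', '人気', '者の', 'お', '笑', 'い']
```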

Implementation: Loading Japanese Data

I used the Livedoor News Corpus, a collection of Japanese news articles that is well suited to training a tokenizer:

```python
# Download and read the Japanese news corpus
import os
import tarfile
import urllib.request

filename = "ldcc-20140209.tar.gz"
if not os.path.exists(filename):
    print("Downloading...")
    urllib.request.urlretrieve("https://www.rondhuit.com/download/ldcc-20140209.tar.gz", filename)

text = ""
articles = []
article_count_limit = 1000

with tarfile.open(filename, "r") as tar:
    for member in tar.getmembers():
        if len(articles) >= article_count_limit:
            break  # stop once enough articles have been collected
        if member.isfile() and member.name.endswith(".txt"):
            file_content = tar.extractfile(member).read().decode("utf-8")
            lines = file_content.split("\n")
            if len(lines) >= 4:  # skip the URL, date, and title lines
                content = "\n".join(lines[3:]).strip()
                if content:
                    articles.append(content)
                    text += content + "\n\n"

print(f"Total text length: {len(text):,}")
print(f"Total articles: {len(articles):,}")
print(f"Initial vocab size: {len(set(text)):,}")
```

Dataset Statistics

- 659,423 total characters

- 1,000 news articles

- 2,913 unique characters (initial vocabulary)

Core BPE Algorithm

The BPE algorithm consists of two main functions: finding frequent pairs and merging them:

```python
from collections import defaultdict


def get_pairs(tokens):
    """Count every adjacent token pair and its frequency."""
    pairs = defaultdict(int)
    for i in range(len(tokens) - 1):
        pairs[(tokens[i], tokens[i + 1])] += 1
    return pairs


def merge_tokens(tokens, pair):
    """Replace each occurrence of `pair` with one merged token (left to right, non-overlapping)."""
    new_tokens = []
    i = 0
    while i < len(tokens):
        if (i < len(tokens) - 1 and
                tokens[i] == pair[0] and tokens[i + 1] == pair[1]):
            new_tokens.append(pair[0] + pair[1])  # merge the pair
            i += 2
        else:
            new_tokens.append(tokens[i])
            i += 1
    return new_tokens
```

Training the Tokenizer

We start with individual characters and iteratively merge the most frequent pairs:

```python
# Initialize with character-level tokens
tokens = list(text)  # split into individual characters
vocab = set(tokens)
target_vocab_size = 5000
merge_count = 0

while len(vocab) < target_vocab_size:
    # Find the most frequent adjacent pair
    pairs = get_pairs(tokens)
    if not pairs:
        break
    best_pair = max(pairs, key=pairs.get)

    # Merge all instances of this pair
    tokens = merge_tokens(tokens, best_pair)
    vocab.add("".join(best_pair))
    merge_count += 1

    if merge_count % 100 == 0:
        print(f"Merge {merge_count}: vocab {len(vocab)}, tokens {len(tokens)}")
```

| Merge # | Pair Merged | New Token | Frequency |
|---|---|---|---|
| 1 | 最 + 近 | 最近 | 847 |
| 2 | T + V | TV | 523 |
| 3 | で + も | でも | 412 |
| 4 | 人 + 気 | 人気 | 389 |
| 5 | 者 + の | 者の | 301 |
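The loop above stores the learned merges only implicitly, in `vocab`. GPT-2's reference encoder, for example, instead keeps the ordered list of merges and, at encoding time, repeatedly applies the earliest-learned merge present in the text. A minimal sketch of that encoding step, assuming a `merges` list recorded in training order (the loop above would need to append each `best_pair` to such a list; `encode_with_merges` is a hypothetical name):

```python
def encode_with_merges(text, merges):
    """Encode `text` by replaying BPE merges in learned order (sketch).

    `merges` is assumed to be the list of pairs in the order they were learned.
    """
    ranks = {pair: rank for rank, pair in enumerate(merges)}  # lower rank = learned earlier
    tokens = list(text)
    while len(tokens) > 1:
        # Among the adjacent pairs present now, find the earliest-learned one
        candidates = [(ranks[p], p) for p in zip(tokens, tokens[1:]) if p in ranks]
        if not candidates:
            break  # no learned merge applies any more
        _, best = min(candidates)
        merged, i = [], 0
        while i < len(tokens):
            if i < len(tokens) - 1 and (tokens[i], tokens[i + 1]) == best:
                merged.append(tokens[i] + tokens[i + 1])
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return tokens


table_merges = [("最", "近"), ("T", "V"), ("で", "も"), ("人", "気"), ("者", "の")]
print(encode_with_merges("最近TVでも人気者のお笑い", table_merges))
# ['最近', 'TV', 'でも', '人気', '者の', 'お', '笑', 'い']

# Hypothetical merge list in which 気+者 was learned before 人+気
print(encode_with_merges("人気者", [("気", "者"), ("人", "気")]))
# ['人', '気者']
```

With the hypothetical ordering, rank-based encoding yields 人 | 気者, whereas a left-to-right lookup over a vocabulary containing both 人気 and 気者 would merge 人気 first. Replaying merges by rank keeps encoding consistent with the order in which merges were learned.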

Tokenizing New Text

Once trained, we can apply our BPE vocabulary to tokenize new text:

def tokenize_with_bpe(text):

"""Apply learned BPE merges to new text"""

tokens = list(text) # Start with characters

changed = True

while changed:

changed = False

new_tokens = []

i = 0

while i < len(tokens):

# Check if adjacent pair exists in our vocabulary

if (i < len(tokens) - 1 and

tokens[i] + tokens[i + 1] in vocab):

new_tokens.append(tokens[i] + tokens[i + 1])

i += 2

changed = True

else:

new_tokens.append(tokens[i])

i += 1

tokens = new_tokens

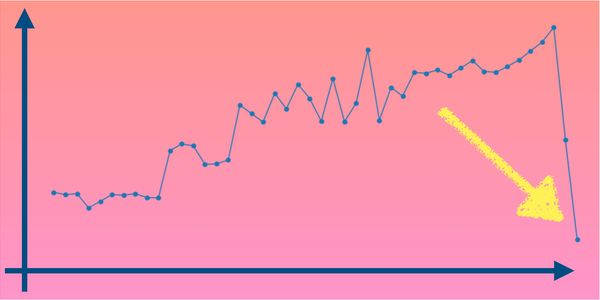

return tokensResults & Performance

The results demonstrate BPE's effectiveness for Japanese text:

```text
# Training Results:
Total text length: 659,423
Total articles: 1,000
Initial vocab size: 2,913
Post-BPE vocab size: 5,000

# Compression Results:
Original characters: 659,423
BPE tokens: 379,352
Compression: 42.5%
```

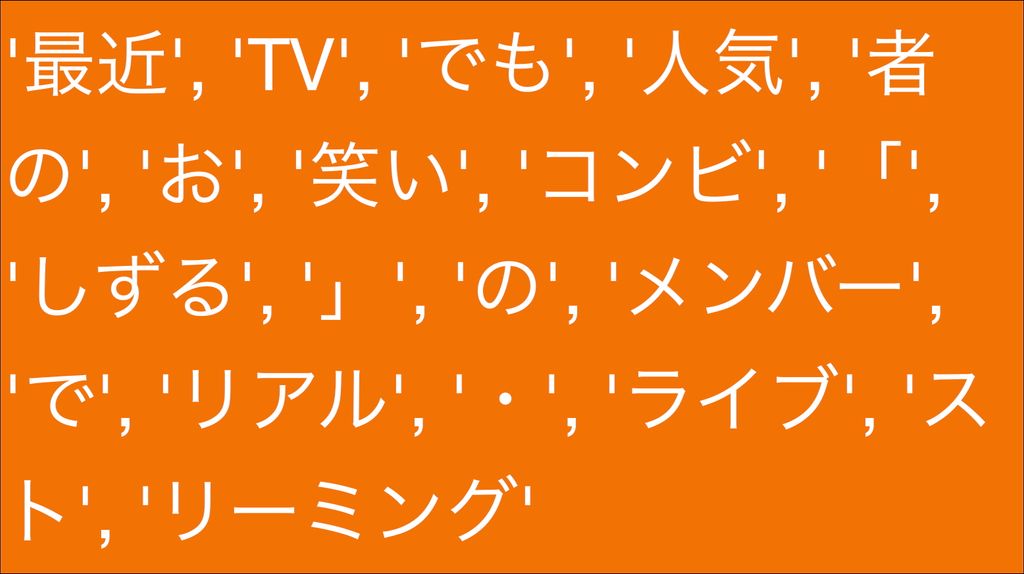
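The reported figures can be checked with a few lines of arithmetic. The 42.5% is the reduction in sequence length, 1 - tokens / characters, which is the same as saying each token covers about 1.74 characters on average:

```python
original_chars = 659_423  # characters in the training text (from the results above)
bpe_tokens = 379_352      # tokens after BPE (from the results above)

reduction = 1 - bpe_tokens / original_chars    # fraction of sequence positions saved
chars_per_token = original_chars / bpe_tokens  # average token length in characters

print(f"Reduction: {reduction:.1%}")              # Reduction: 42.5%
print(f"Chars per token: {chars_per_token:.2f}")  # Chars per token: 1.74
```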

Example Tokenization

Here's how a Japanese sentence gets tokenized with our trained BPE model:

Original: 最近TVでも人気者のお笑いコンビ「しずる」のメンバーで...

BPE Tokens:

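Notice how BPE automatically learned frequent units like "最近" (recently) and "人気" (popular) without any linguistic preprocessing! Not every merge lines up with a word, though: "者の" joins 者 (person, the tail of 人気者 "popular person") with the possessive particle の, straddling a word boundary.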

Why This Matters for Japanese

Japanese presents unique challenges for tokenization:

- No spaces between words: BPE learns frequent character sequences, which often (though not always) line up with word boundaries

- Multiple writing systems: Handles hiragana, katakana, kanji, and Latin letters with the same procedure

- Compound words: Learns frequent kanji combinations as single tokens

- Particles and grammar: Captures frequent particle combinations such as "でも" and particle attachments such as "者の"
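To see the mix of writing systems concretely, the sketch below buckets the characters at the start of the example sentence by Unicode block. The ranges are an assumption of this sketch and cover only the main blocks (Hiragana U+3040 to U+309F, Katakana U+30A0 to U+30FF, CJK Unified Ideographs U+4E00 to U+9FFF); rarer kanji in extension blocks and full-width forms fall into "other". `script_of` is a hypothetical helper.

```python
from collections import Counter


def script_of(ch):
    """Hypothetical helper: rough script label from the character's Unicode block."""
    cp = ord(ch)
    if 0x3040 <= cp <= 0x309F:
        return "hiragana"
    if 0x30A0 <= cp <= 0x30FF:
        return "katakana"
    if 0x4E00 <= cp <= 0x9FFF:
        return "kanji"
    if ch.isascii() and ch.isalpha():
        return "latin"
    return "other"


counts = Counter(script_of(ch) for ch in "最近TVでも人気者のお笑いコンビ")
print(counts.most_common())
# [('kanji', 6), ('hiragana', 5), ('katakana', 3), ('latin', 2)]
```

Running the same function over `set(text)` from the training corpus would show how the initial character vocabulary splits across scripts.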

Try It Yourself

The complete implementation is available on GitHub with an interactive Jupyter notebook. You can:

- Run the BPE training on the Japanese corpus

- Experiment with different vocabulary sizes

- Test tokenization on your own Japanese text

- Analyze compression statistics

Conclusion

BPE strikes a practical balance between character-level robustness and word-level semantics. By starting simple and building complexity through data-driven merging, it creates vocabularies that are both compact and meaningful.

For Japanese text specifically, BPE's ability to discover frequent patterns without a dictionary or word segmenter makes it a strong choice for modern NLP applications. The 42.5% reduction in sequence length we achieved, with tokens that still concatenate back to the exact original text, demonstrates its effectiveness.

Whether you're building language models, machine translation systems, or text analysis tools, understanding and implementing BPE is well worth the effort for any language, especially morphologically rich ones like Japanese.

Leave comment

Comments

There are no comments at the moment.

Check out other blog posts

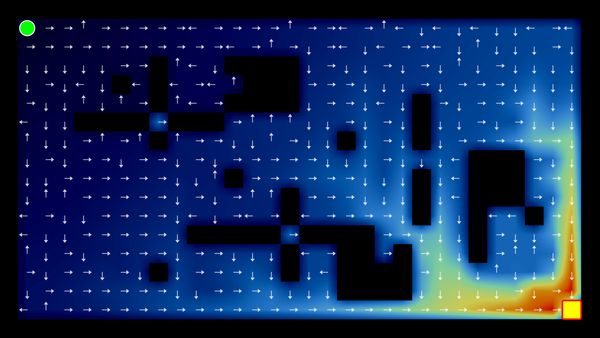

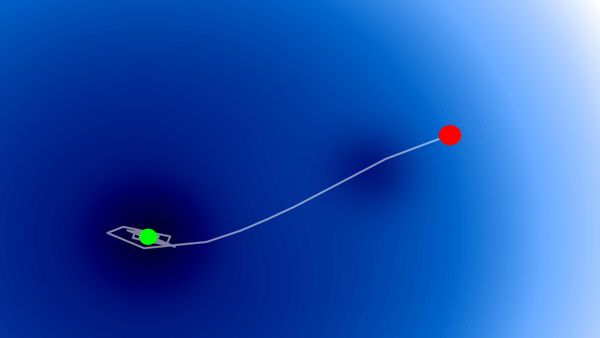

2025/07/07

Q-Learning: Interactive Reinforcement Learning Foundation

2025/07/06

Optimization Algorithms: SGD, Momentum, and Adam

2024/06/19

Create A Simple and Dynamic Tooltip With Svelte and JavaScript

2024/06/17

Create an Interactive Map of Tokyo with JavaScript

2024/06/14

How to Easily Fix Japanese Character Issue in Matplotlib

2024/06/13

Book Review | Talking to Strangers: What We Should Know about the People We Don't Know by Malcolm Gladwell

2024/06/07

Most Commonly Used 3,000 Kanjis in Japanese

2024/06/07

Replace With Regex Using VSCode

2024/06/06

Do Not Use Readable Store in Svelte

2024/06/05

Increase Website Load Speed by Compressing Data with Gzip and Pako

2024/05/31

Find the Word the Mouse is Pointing to on a Webpage with JavaScript

2024/05/29

Create an Interactive Map with Svelte using SVG

2024/05/28

Book Review | Originals: How Non-Conformists Move the World by Adam Grant & Sheryl Sandberg

2024/05/27

How to Algorithmically Solve Sudoku Using Javascript

2024/05/26

How I Increased Traffic to my Website by 10x in a Month

2024/05/24

Life is Like Cycling

2024/05/19

Generate a Complete Sudoku Grid with Backtracking Algorithm in JavaScript

2024/05/16

Why Tailwind is Amazing and How It Makes Web Dev a Breeze

2024/05/15

Generate Sitemap Automatically with Git Hooks Using Python

2024/05/14

Book Review | Range: Why Generalists Triumph in a Specialized World by David Epstein

2024/05/13

What is Svelte and SvelteKit?

2024/05/12

Internationalization with SvelteKit (Multiple Language Support)

2024/05/11

Reduce Svelte Deploy Time With Caching

2024/05/10

Lazy Load Content With Svelte and Intersection Oberver

2024/05/10

Find the Optimal Stock Portfolio with a Genetic Algorithm

2024/05/09

Convert ShapeFile To SVG With Python

2024/05/08

Reactivity In Svelte: Variables, Binding, and Key Function

2024/05/07

Book Review | The Art Of War by Sun Tzu

2024/05/06

Specialists Are Dead. Long Live Generalists!

2024/05/03

Analyze Voter Behavior in Turkish Elections with Python

2024/05/01

Create Turkish Voter Profile Database With Web Scraping

2024/04/30

Make Infinite Scroll With Svelte and Tailwind

2024/04/29

How I Reached Japanese Proficiency In Under A Year

2024/04/25

Use-ready Website Template With Svelte and Tailwind

2024/01/29

Lazy Engineers Make Lousy Products

2024/01/28

On Greatness

2024/01/28

Converting PDF to PNG on a MacBook

2023/12/31

Recapping 2023: Compilation of 24 books read

2023/12/30

Create a Photo Collage with Python PIL

2024/01/09

Detect Device & Browser of Visitors to Your Website

2024/01/19

Anatomy of a ChatGPT Response