Optimization Algorithms: SGD, Momentum, and Adam

Published: July 6, 2025

What You'll Learn

- How gradient descent optimizers work and differ from each other

- SGD, Momentum, and Adam algorithms with interactive visualizations

- Parameter tuning and convergence behavior

- Implementation details and practical usage

Optimization Algorithms

Optimizers determine how neural networks learn by updating parameters to minimize loss. The choice of optimizer significantly affects training speed and final performance.

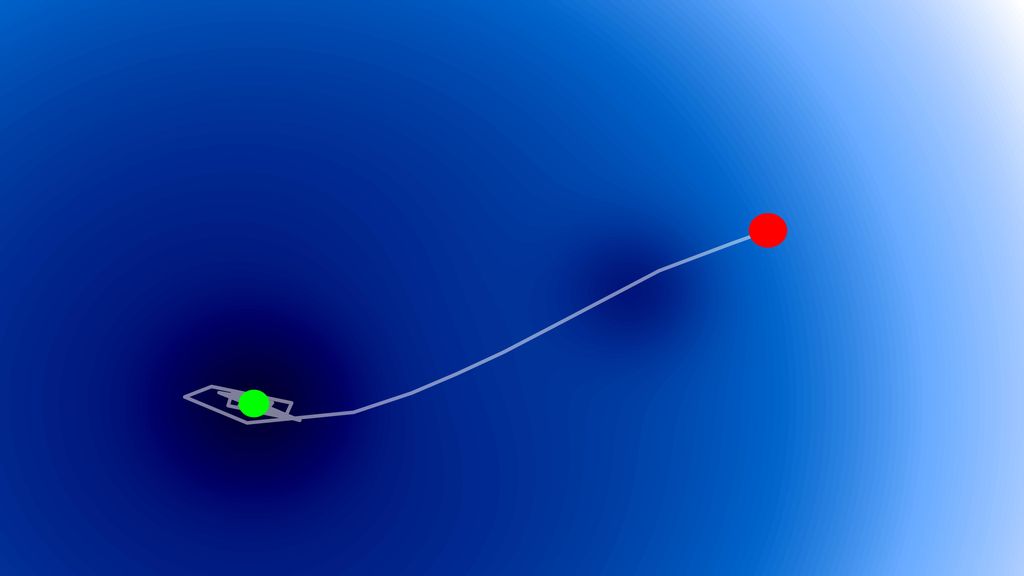

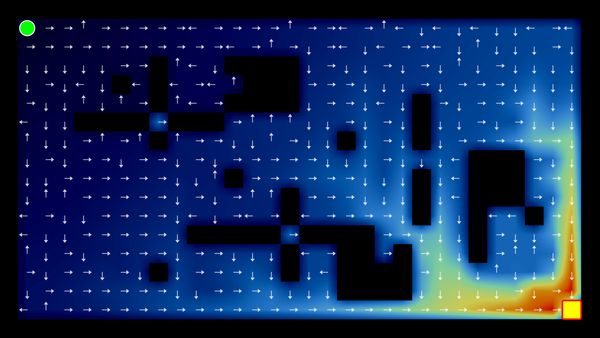

Interactive Optimizer Comparison

Watch how different optimizers navigate toward the minimum of a simple quadratic function:

Controls

Visualization

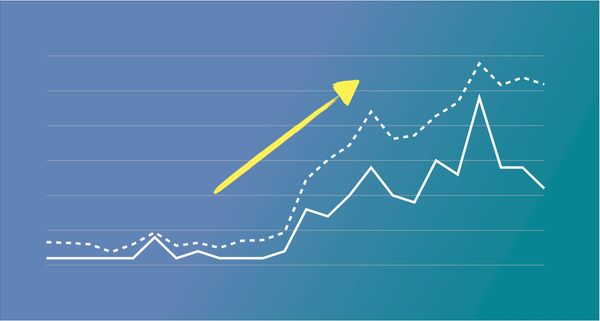

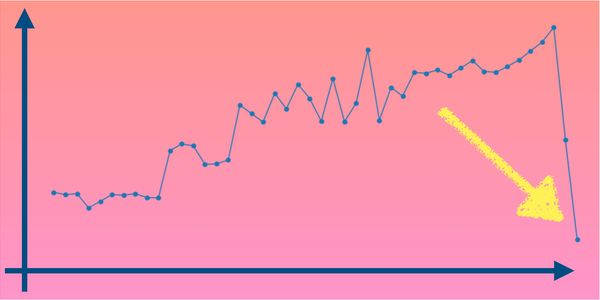

Loss Over Time

Step 0 / 100 | SGD Loss: 9.0000 | Momentum Loss: 9.0000 | Adam Loss: 9.0000

Stochastic Gradient Descent (SGD)

The simplest optimizer. Updates parameters directly proportional to the gradient magnitude.

Key Formula:

θ = θ - α * ∇f(θ)

Where α is learning rate and ∇f(θ) is the gradient

class SGD:

def __init__(self, learning_rate=0.01):

self.lr = learning_rate

def update(self, params, gradients):

for i in range(len(params)):

params[i] -= self.lr * gradients[i]

return paramsCharacteristics:

- Pros: Simple, memory efficient, works well for convex problems

- Cons: Can oscillate in ravines, sensitive to learning rate

- Best for: Large datasets, when memory is limited

SGD with Momentum

Adds momentum to SGD by accumulating a weighted average of past gradients. This helps accelerate convergence and reduces oscillations.

Key Formulas:

v = β * v + ∇f(θ)

θ = θ - α * v

Where β is momentum coefficient (typically 0.9)

class SGDMomentum:

def __init__(self, learning_rate=0.01, momentum=0.9):

self.lr = learning_rate

self.momentum = momentum

self.velocity = None

def update(self, params, gradients):

if self.velocity is None:

self.velocity = [0] * len(params)

for i in range(len(params)):

self.velocity[i] = (self.momentum * self.velocity[i] +

gradients[i])

params[i] -= self.lr * self.velocity[i]

return paramsCharacteristics:

- Pros: Faster convergence, reduced oscillations, overcomes local minima better

- Cons: Extra hyperparameter, may overshoot minimum

- Best for: Non-convex optimization, when oscillations are problematic

Adam (Adaptive Moment Estimation)

Combines momentum with adaptive learning rates. Maintains separate learning rates for each parameter based on first and second moment estimates.

Key Formulas:

m = β₁ * m + (1-β₁) * ∇f(θ)

v = β₂ * v + (1-β₂) * ∇f(θ)²

m̂ = m / (1-β₁ᵗ), v̂ = v / (1-β₂ᵗ)

θ = θ - α * m̂ / (√v̂ + ε)

Default: β₁=0.9, β₂=0.999, ε=1e-8

class Adam:

def __init__(self, learning_rate=0.001, beta1=0.9, beta2=0.999):

self.lr = learning_rate

self.beta1 = beta1

self.beta2 = beta2

self.epsilon = 1e-8

self.m = None # First moment

self.v = None # Second moment

self.t = 0 # Time step

def update(self, params, gradients):

if self.m is None:

self.m = [0] * len(params)

self.v = [0] * len(params)

self.t += 1

for i in range(len(params)):

# Update moments

self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * gradients[i]

self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * gradients[i]**2

# Bias correction

m_hat = self.m[i] / (1 - self.beta1**self.t)

v_hat = self.v[i] / (1 - self.beta2**self.t)

# Update parameters

params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.epsilon)

return paramsCharacteristics:

- Pros: Adaptive learning rates, robust to hyperparameters, works well out-of-the-box

- Cons: Higher memory usage, may not converge to optimal solution in some cases

- Best for: Deep learning, sparse gradients, most general-purpose optimization

Comparison Summary

| Optimizer | Memory | Convergence | Hyperparameters | Best Use Case |

|---|---|---|---|---|

| SGD | Minimal | Slow but stable | Learning rate only | Large datasets, limited memory |

| Momentum | Low | Faster than SGD | LR + momentum | Non-convex problems |

| Adam | Higher | Fast, adaptive | LR + 2 betas | Deep learning, general purpose |

Practical Guidelines

When to Use SGD

- Large datasets where memory is limited

- Fine-tuning pre-trained models

- When you need reproducible results

- Simple convex optimization problems

When to Use Adam

- Training deep neural networks from scratch

- Sparse gradients or sparse data

- When you want good default performance

- Rapid prototyping and experimentation

Learning Rate Guidelines:

- SGD: Start with 0.1, reduce if training is unstable

- SGD + Momentum: Start with 0.01-0.1, momentum 0.9

- Adam: Start with 0.001, usually works well without tuning

Implementation in Popular Frameworks

PyTorch

import torch.optim as optim

# SGD

optimizer = optim.SGD(model.parameters(), lr=0.01)

# SGD with momentum

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# Adam

optimizer = optim.Adam(model.parameters(), lr=0.001)TensorFlow/Keras

from tensorflow.keras.optimizers import SGD, Adam

# SGD

optimizer = SGD(learning_rate=0.01)

# SGD with momentum

optimizer = SGD(learning_rate=0.01, momentum=0.9)

# Adam

optimizer = Adam(learning_rate=0.001)Key Takeaways

- Start with Adam for most deep learning tasks - it's robust and requires minimal tuning

- Use SGD with momentum for fine-tuning or when you need the best possible final performance

- Learning rate scheduling can improve convergence for all optimizers

- The optimizer choice matters less than proper architecture and data quality

- Experiment with different optimizers if training is unstable or convergence is poor

Leave comment

Comments

There are no comments at the moment.

Check out other blog posts

2025/07/07

Q-Learning: Interactive Reinforcement Learning Foundation

2025/07/05

Building a Japanese BPE Tokenizer: From Characters to Subwords

2024/06/19

Create A Simple and Dynamic Tooltip With Svelte and JavaScript

2024/06/17

Create an Interactive Map of Tokyo with JavaScript

2024/06/14

How to Easily Fix Japanese Character Issue in Matplotlib

2024/06/13

Book Review | Talking to Strangers: What We Should Know about the People We Don't Know by Malcolm Gladwell

2024/06/07

Most Commonly Used 3,000 Kanjis in Japanese

2024/06/07

Replace With Regex Using VSCode

2024/06/06

Do Not Use Readable Store in Svelte

2024/06/05

Increase Website Load Speed by Compressing Data with Gzip and Pako

2024/05/31

Find the Word the Mouse is Pointing to on a Webpage with JavaScript

2024/05/29

Create an Interactive Map with Svelte using SVG

2024/05/28

Book Review | Originals: How Non-Conformists Move the World by Adam Grant & Sheryl Sandberg

2024/05/27

How to Algorithmically Solve Sudoku Using Javascript

2024/05/26

How I Increased Traffic to my Website by 10x in a Month

2024/05/24

Life is Like Cycling

2024/05/19

Generate a Complete Sudoku Grid with Backtracking Algorithm in JavaScript

2024/05/16

Why Tailwind is Amazing and How It Makes Web Dev a Breeze

2024/05/15

Generate Sitemap Automatically with Git Hooks Using Python

2024/05/14

Book Review | Range: Why Generalists Triumph in a Specialized World by David Epstein

2024/05/13

What is Svelte and SvelteKit?

2024/05/12

Internationalization with SvelteKit (Multiple Language Support)

2024/05/11

Reduce Svelte Deploy Time With Caching

2024/05/10

Lazy Load Content With Svelte and Intersection Oberver

2024/05/10

Find the Optimal Stock Portfolio with a Genetic Algorithm

2024/05/09

Convert ShapeFile To SVG With Python

2024/05/08

Reactivity In Svelte: Variables, Binding, and Key Function

2024/05/07

Book Review | The Art Of War by Sun Tzu

2024/05/06

Specialists Are Dead. Long Live Generalists!

2024/05/03

Analyze Voter Behavior in Turkish Elections with Python

2024/05/01

Create Turkish Voter Profile Database With Web Scraping

2024/04/30

Make Infinite Scroll With Svelte and Tailwind

2024/04/29

How I Reached Japanese Proficiency In Under A Year

2024/04/25

Use-ready Website Template With Svelte and Tailwind

2024/01/29

Lazy Engineers Make Lousy Products

2024/01/28

On Greatness

2024/01/28

Converting PDF to PNG on a MacBook

2023/12/31

Recapping 2023: Compilation of 24 books read

2023/12/30

Create a Photo Collage with Python PIL

2024/01/09

Detect Device & Browser of Visitors to Your Website

2024/01/19

Anatomy of a ChatGPT Response